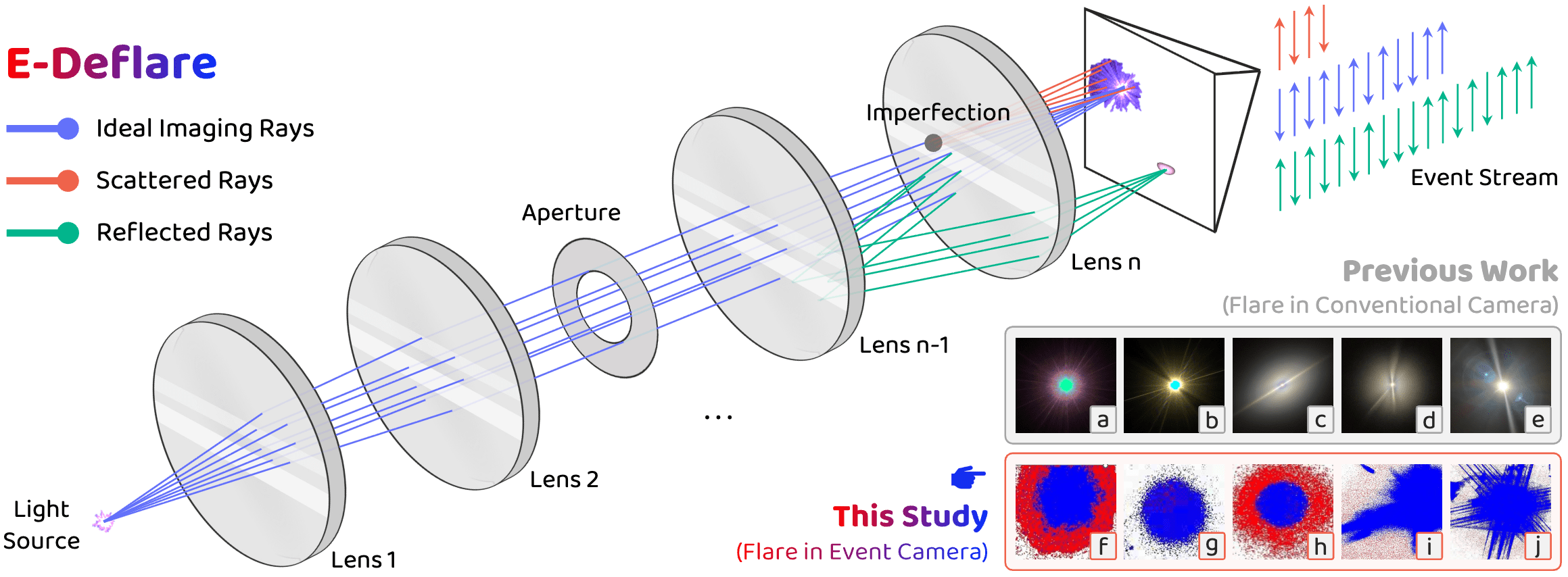

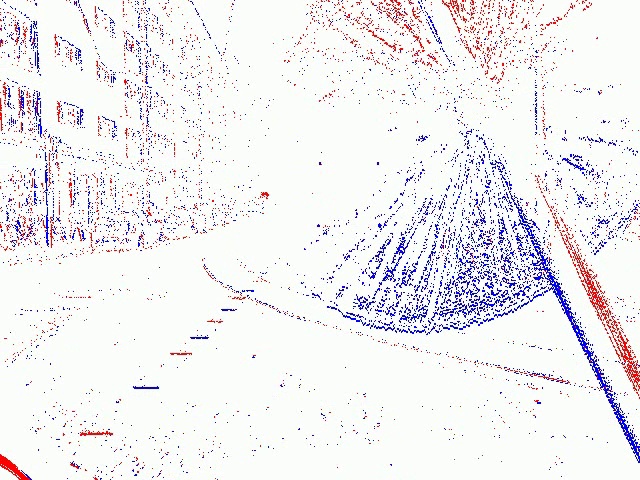

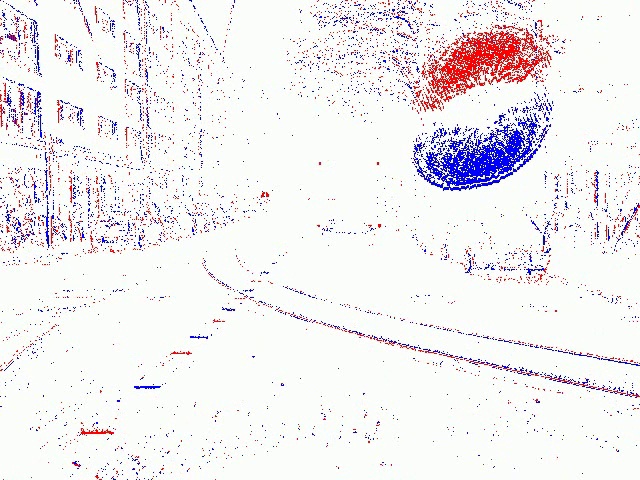

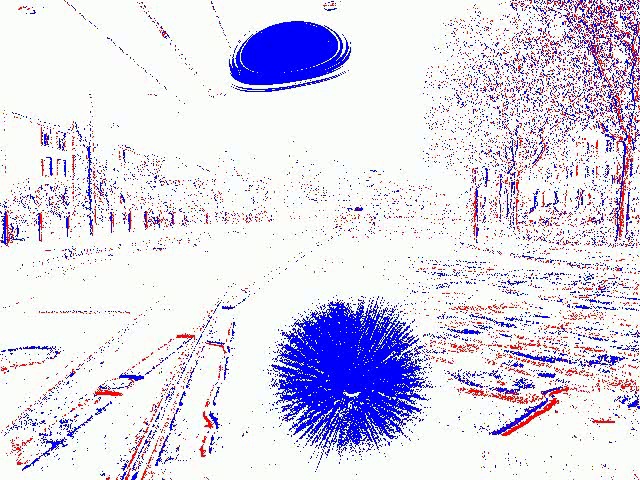

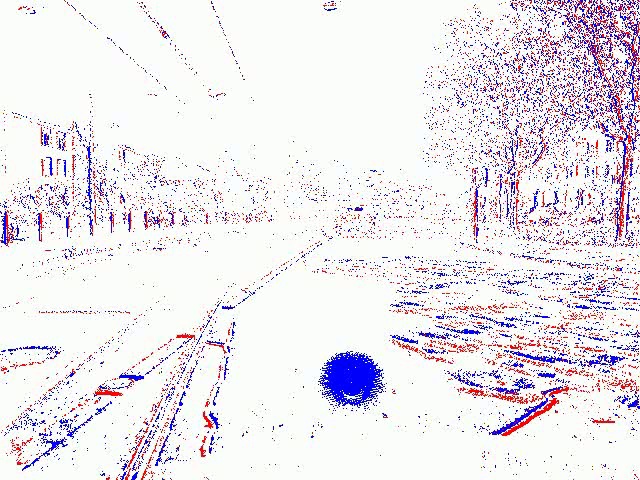

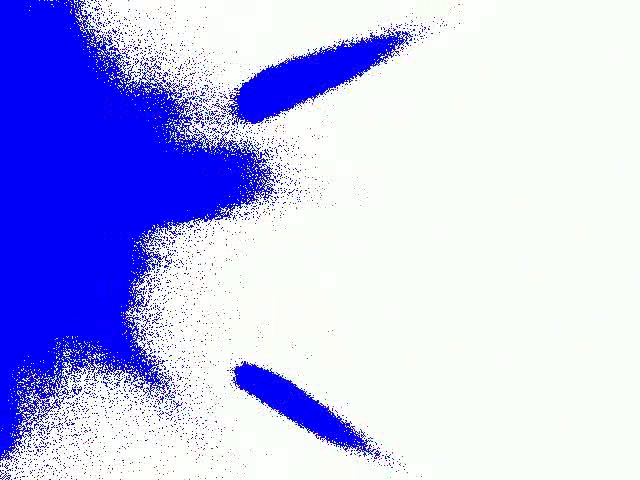

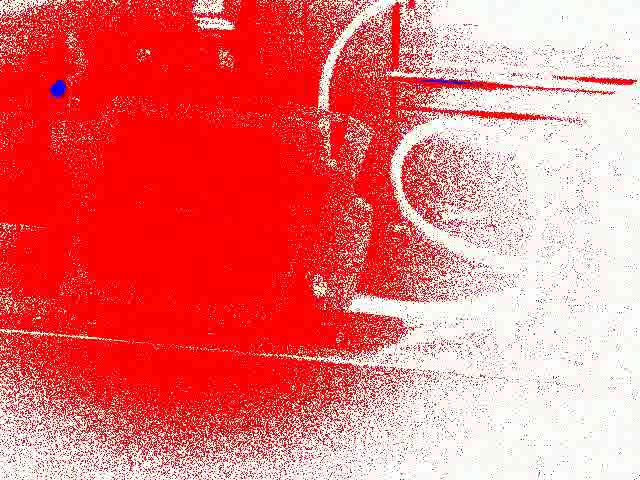

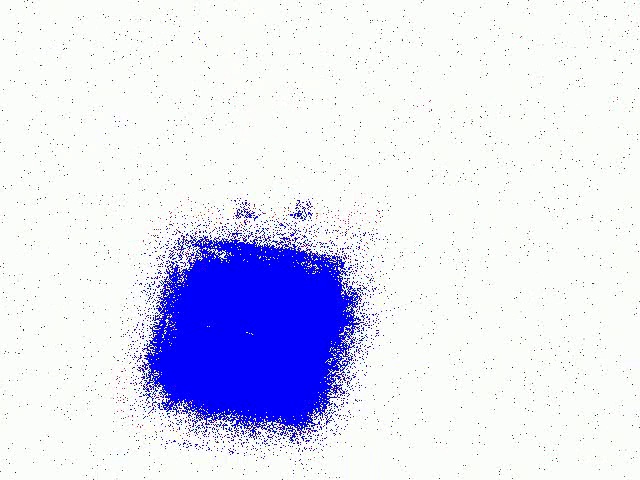

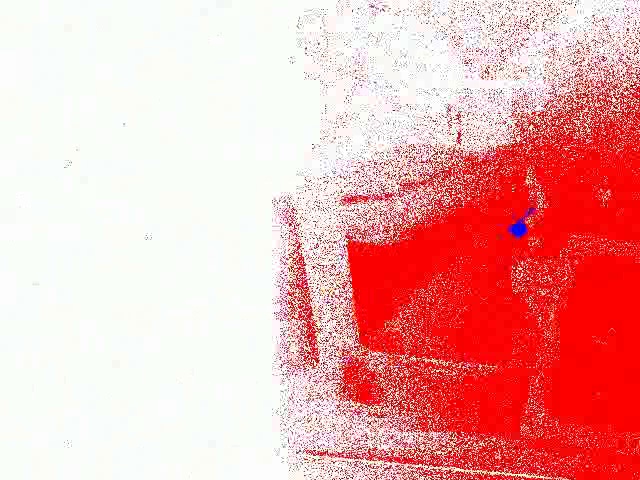

Figure. The physical principle of lens flare and its manifestation in event cameras. The central diagram deconstructs the universal optical process: ideal Imaging Rays form the scene, while Scattered Rays (from lens imperfections) and Internal Reflections superimpose flare artifacts. While lens flare is a well-studied problem in conventional imaging (bottom right, plots a to e), its complex spatio-temporal manifestation in event data has been largely overlooked (bottom right, plots f to j). Our work is the first to systematically address this challenge. The positive (brightness increase) and negative (brightness decrease) events are colored red and blue, respectively.

Event cameras have the potential to revolutionize vision systems with their high temporal resolution and dynamic range, yet they remain susceptible to lens flare, a fundamental optical artifact that causes severe degradation. In event streams, this artifact forms a complex spatio-temporal distortion that has been largely overlooked.

We present the first systematic framework for removing lens flare from event camera data. We first establish the theoretical foundation by deriving a physics-grounded forward model of the non-linear suppression mechanism. This insight enables the creation of the E-Deflare Benchmark, a comprehensive resource featuring a large-scale simulated training set, E-Flare-2.7K, and the first-ever paired real-world test set, E-Flare-R, captured by our novel optical system. Empowered by this benchmark, we design E-DeflareNet, which achieves state-of-the-art restoration performance. Extensive experiments validate our approach and demonstrate clear benefits for downstream tasks.

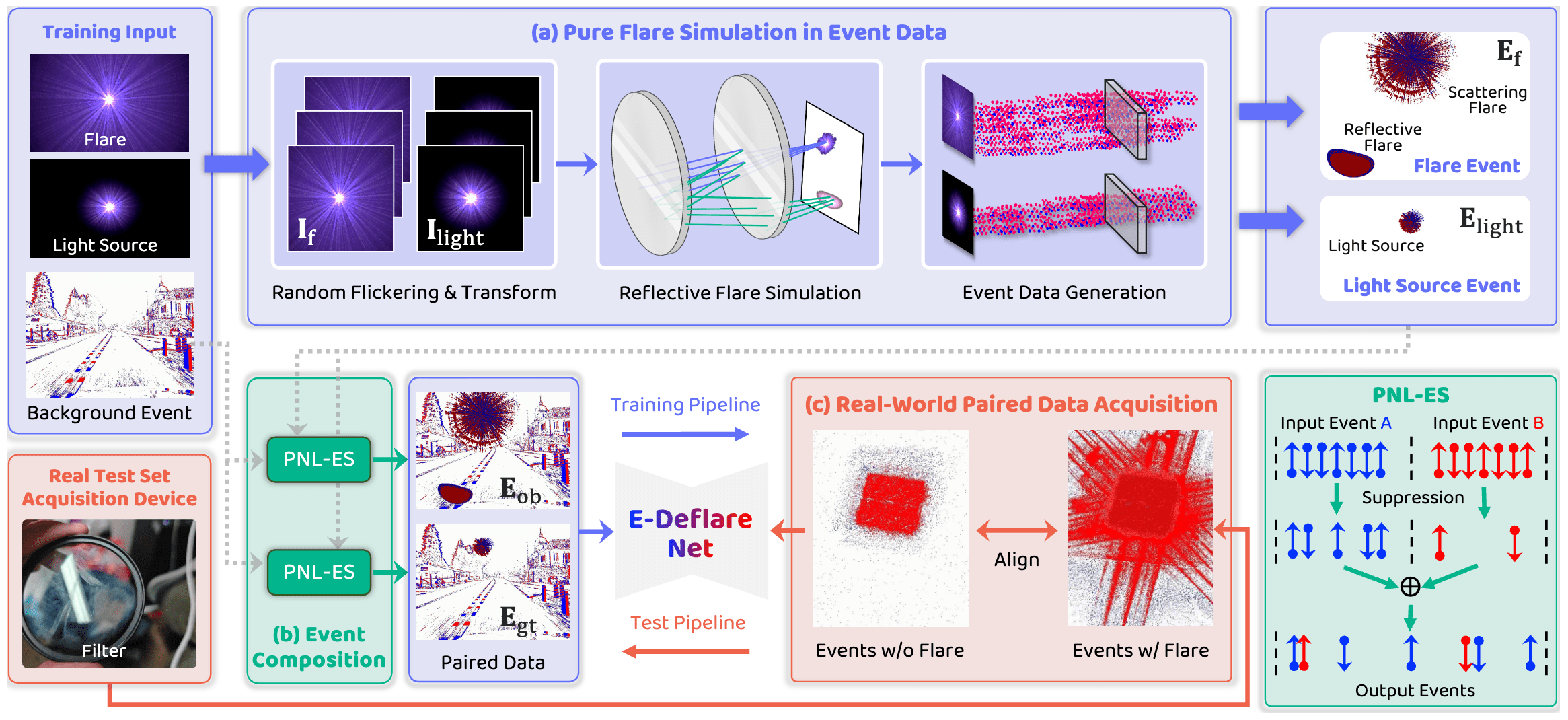

Figure. The pipeline of data generation, training, and validation. In the E-Deflare framework, we introduce two parallel pipelines to address data scarcity. The training pipeline synthesizes paired data by first generating dynamic flare events from static images (e.g., Flare7K++) and then fusing them with real background events (e.g., DSEC) via our physics-guided PNL-ES operator. The testing pipeline captures paired real-world data using a controllable optical setup. A removable filter allows us to record the same dynamic scene both with and without flare; after post-processing, these paired recordings form our validation set.
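The pipeline above generates dynamic flare events from static images without spelling out how. As a rough illustration only, the sketch below applies the idealized event-camera model (a pixel emits an event each time its log intensity changes by one contrast threshold) to a sequence of rendered frames, such as a flare image animated over time. The function name `frames_to_events`, the threshold of 0.2, and the `eps` offset are assumptions. This is not the authors' generator: it ignores sensor noise and intra-frame timing, and it does not implement the PNL-ES fusion operator.

```python
import numpy as np

def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Idealized event generator (hypothetical helper, not the paper's code).

    Emits rows (x, y, t, p) whenever a pixel's log intensity moves by at least
    `threshold` from its reference level. All events from one frame share that
    frame's timestamp; noise, refractory period, and bandwidth are ignored.
    """
    frames = np.asarray(frames, dtype=np.float64)
    ref = np.log(frames[0] + eps)  # per-pixel reference log intensity
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        diff = np.log(frame + eps) - ref
        # signed number of whole threshold crossings per pixel (rounded toward zero)
        n = np.fix(diff / threshold).astype(int)
        ys, xs = np.nonzero(n)
        for y, x in zip(ys, xs):
            p = 1 if n[y, x] > 0 else -1
            # one event per crossing: positive = brighter, negative = darker
            events.extend([(int(x), int(y), float(t), p)] * abs(int(n[y, x])))
        # advance the reference only by the amount actually crossed
        ref = ref + n * threshold
    return np.array(events, dtype=np.float64).reshape(-1, 4)
```

Keeping the residual (sub-threshold) change in the reference, rather than resetting it to the new frame, mirrors how a real pixel accumulates small changes until they cross the threshold.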

We establish and contribute the E-Deflare benchmark, comprising three new datasets:

| Dataset | Type | Split | # Samples | Description |
|---|---|---|---|---|
| E-Flare-2.7K | Simulated | Train | 2,545 | Large-scale simulated training set. Each sample is a 20 ms voxel grid. |
| E-Flare-2.7K | Simulated | Test | 175 | Held-out simulated test split. |
| E-Flare-R | Real-world | Test | 150 | Real-world paired test set for sim-to-real evaluation. |
| DSEC-Flare | Real-world | — | — | Curated sequences from DSEC showcasing lens flare in public datasets. |

E-Flare-2.7K provides large-scale simulated data with 2,720 paired samples, split into 2,545 training and 175 test pairs. E-Flare-R offers 150 real-world paired samples captured with our novel optical system for sim-to-real validation. DSEC-Flare shows that lens flare is widespread even in the popular DSEC benchmark.
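Each E-Flare-2.7K sample is described as a 20 ms voxel grid, but the binning scheme is not stated here. A common choice in event-based restoration is a voxel grid that splits each event's polarity between its two nearest temporal bins (bilinear interpolation in time). The sketch below assumes that scheme; `events_to_voxel_grid` and the bin count are illustrative and not taken from the benchmark's code.

```python
import numpy as np

def events_to_voxel_grid(events, num_bins, height, width, t_start, t_end):
    """Accumulate events (x, y, t, p) into a (num_bins, height, width) grid.

    Hypothetical helper: each event's polarity is split between its two
    nearest temporal bins with linear weights, so every in-window event
    contributes a total weight equal to its polarity.
    """
    grid = np.zeros((num_bins, height, width), dtype=np.float64)
    if len(events) == 0:
        return grid
    events = np.asarray(events, dtype=np.float64)
    x = events[:, 0].astype(int)
    y = events[:, 1].astype(int)
    t = events[:, 2]
    p = events[:, 3]
    # map timestamps in [t_start, t_end] to continuous bin coordinates [0, num_bins - 1]
    tn = (t - t_start) / (t_end - t_start) * (num_bins - 1)
    left = np.floor(tn).astype(int)
    w_right = tn - left
    # scatter-add into the left and right neighbouring bins
    for b, w in ((left, 1.0 - w_right), (left + 1, w_right)):
        valid = (b >= 0) & (b < num_bins)
        np.add.at(grid, (b[valid], y[valid], x[valid]), (p * w)[valid])
    return grid
```

For a 20 ms window with timestamps in seconds, one would call `events_to_voxel_grid(events, num_bins, H, W, t0, t0 + 0.02)`. `np.add.at` is used instead of fancy-index assignment so that multiple events landing in the same cell accumulate rather than overwrite each other.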

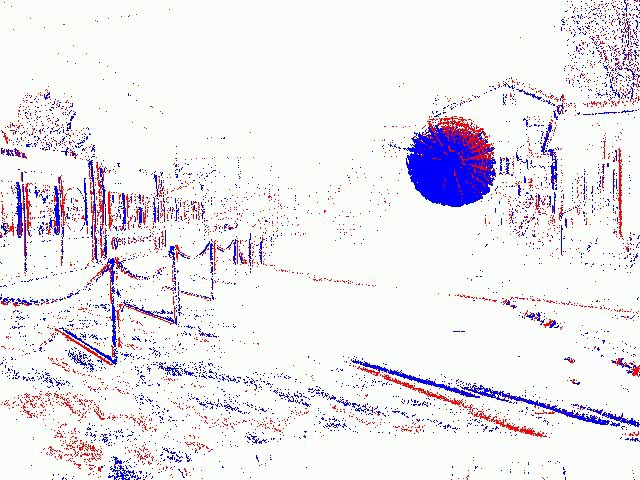

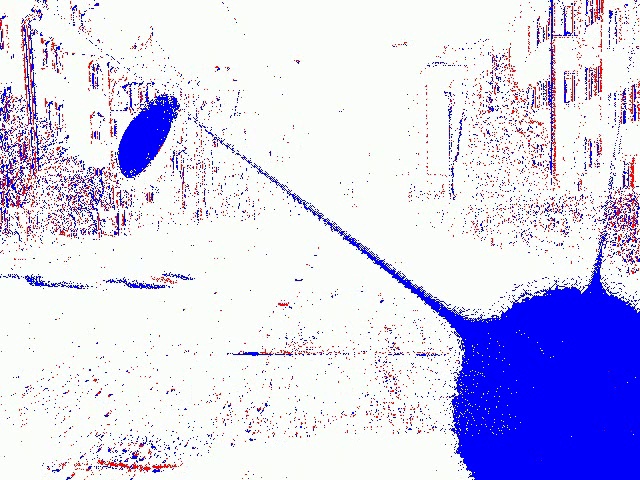

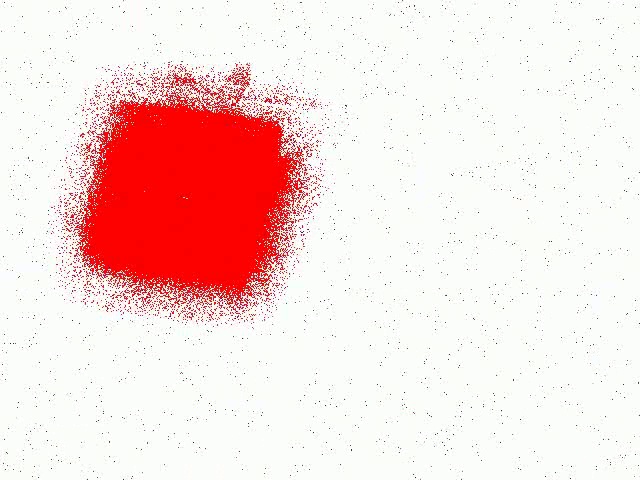

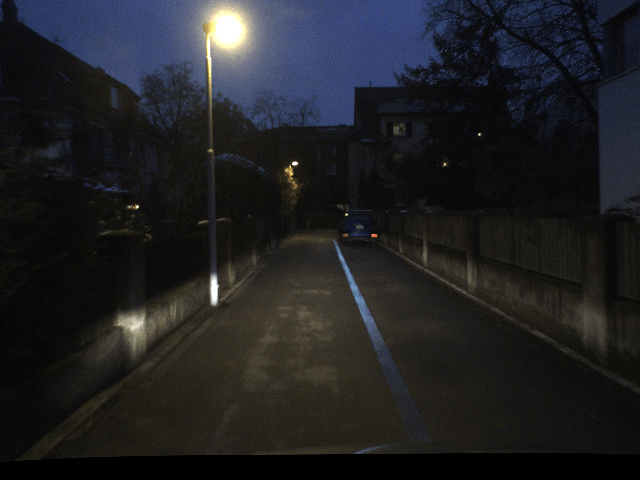

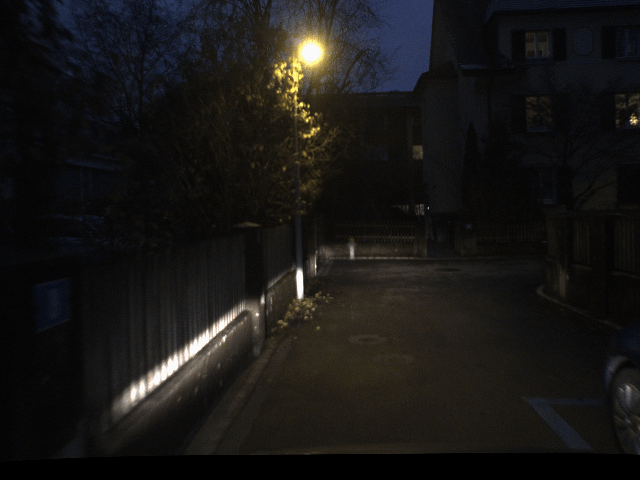

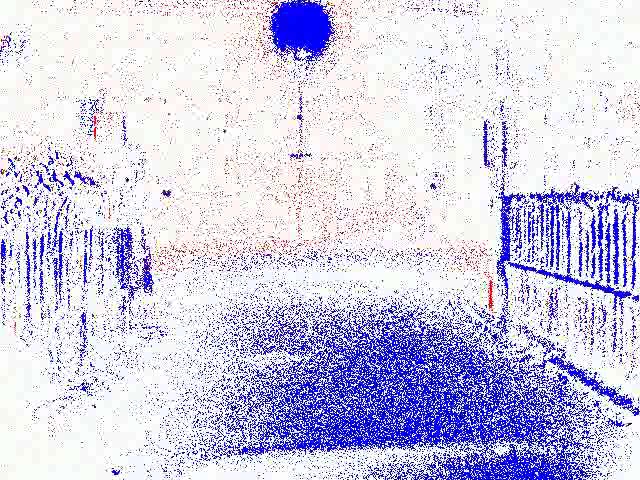

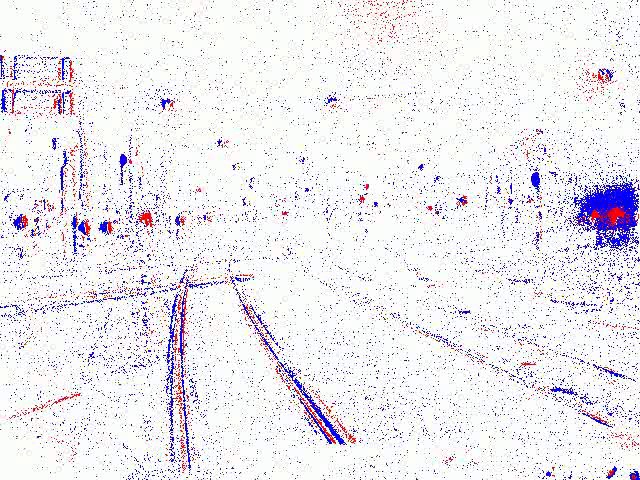

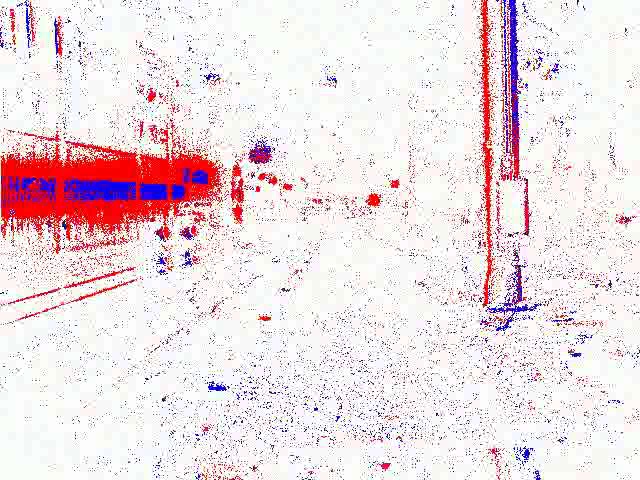

Examples from our three datasets are shown as image pairs in a two-column layout: for E-Flare-2.7K and E-Flare-R, the input (with flare) beside the clean target; for DSEC-Flare, the event stream (with flare) beside the corresponding RGB frame.

Quantitative comparisons on the E-Flare-2.7K test set. We compare against four event-based de-flaring baselines (EFR, PFD-A, PFD-B, and Voxel Transform) using event-level and voxel-level metrics. Best results are in **bold** and second-best in *italics*; Raw Input is shown for reference and excluded from the ranking.

| Method | Chamfer↓ | Gaussian↓ | MSE↓ | PMSE 2↓ | PMSE 4↓ | R-F1↑ | T-F1↑ | TP-F1↑ |
|---|---|---|---|---|---|---|---|---|
| Raw Input | 1.3555 | 0.5222 | 0.8315 | 0.3666 | 0.3430 | 0.5138 | 0.6303 | 0.6939 |
| EFR | 1.3237 | 0.5439 | 0.5357 | 0.2019 | 0.1755 | 0.1616 | 0.3572 | 0.4811 |
| PFD-A | 1.3958 | 0.5397 | 0.8357 | 0.3637 | 0.3392 | 0.4383 | *0.5496* | 0.6125 |
| PFD-B | *1.2496* | *0.4613* | *0.2851* | 0.1159 | 0.1021 | *0.4460* | 0.5155 | 0.5727 |
| Voxel Transform | 1.2923 | 0.5576 | 0.3495 | *0.1037* | *0.0829* | 0.2177 | 0.5444 | *0.6318* |
| E-DeflareNet (Ours) | **0.4477** | **0.2646** | **0.1269** | **0.0487** | **0.0435** | **0.7071** | **0.7627** | **0.7794** |
| Improvement (%) | 64.2%↑ | 42.6%↑ | 55.5%↑ | 53.0%↑ | 47.5%↑ | 58.5%↑ | 38.8%↑ | 23.4%↑ |

↓ lower is better, ↑ higher is better. The final row gives the relative improvement of our method over the strongest baseline for each metric.
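The tables report a Chamfer distance between restored and clean events, but its exact definition (coordinate scaling, and whether the two directions are summed or averaged) is not given on this page. A generic symmetric Chamfer distance over (x, y, t) points is sketched below as an assumption, not the benchmark's implementation. `time_scale` is a hypothetical knob for making time commensurate with pixel units, and the brute-force distance matrix is only practical for small event sets (a k-d tree would be the usual choice at scale).

```python
import numpy as np

def chamfer_distance(a, b, time_scale=1.0):
    """Symmetric Chamfer distance between two event sets of (x, y, t) rows.

    Hypothetical sketch: t is multiplied by `time_scale`, then the mean
    nearest-neighbour distance is computed in each direction and summed.
    """
    scale = np.array([1.0, 1.0, time_scale])
    a = np.asarray(a, dtype=np.float64) * scale
    b = np.asarray(b, dtype=np.float64) * scale
    # pairwise Euclidean distances, shape (len(a), len(b))
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # a -> b: each point's closest match in b; b -> a: the reverse
    return d.min(axis=1).mean() + d.min(axis=0).mean()
```

Because both directions are included, the metric penalizes residual flare events (points in the output with no clean counterpart) as well as removed scene events (clean points with no output counterpart).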

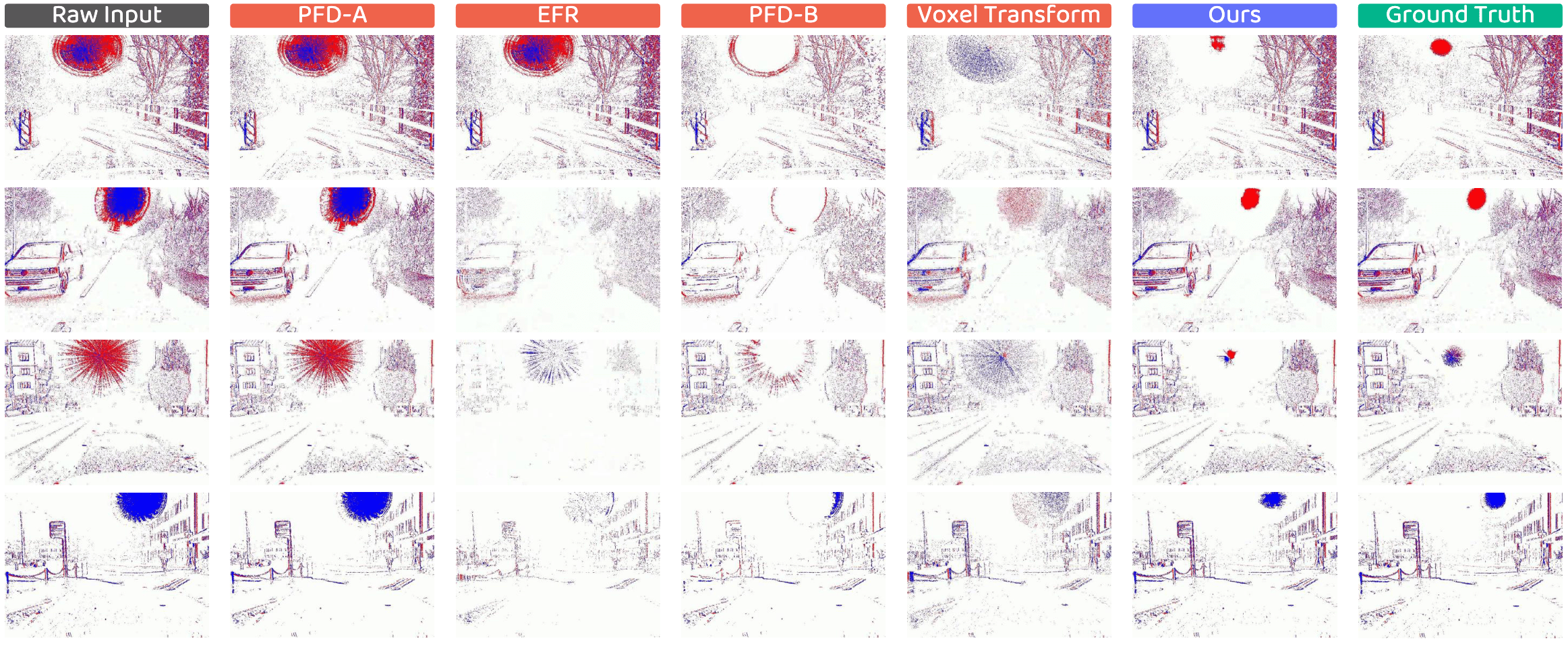

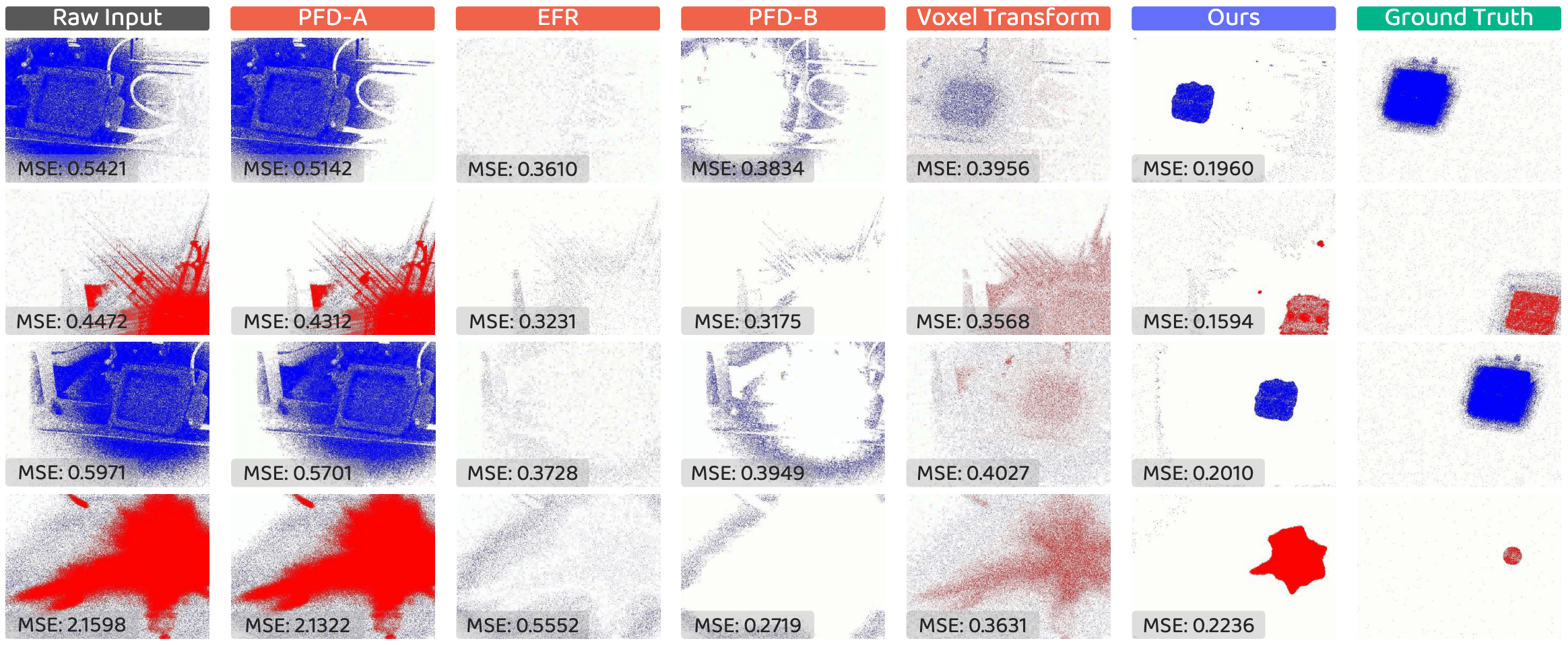

Figure. Qualitative assessments on E-Flare-2.7K. Each column shows the output of a different method applied to the same flare-corrupted input. The rightmost column displays the ground truth for reference. Best viewed in color and zoomed in for details.

Quantitative comparisons on the E-Flare-R dataset. We compare against four event-based de-flaring baselines (EFR, PFD-A, PFD-B, and Voxel Transform) using event-level and voxel-level metrics. Best results are in **bold** and second-best in *italics*; Raw Input is shown for reference and excluded from the ranking.

| Method | Chamfer↓ | Gaussian↓ | MSE↓ | PMSE 2↓ | PMSE 4↓ | R-F1↑ | T-F1↑ | TP-F1↑ |
|---|---|---|---|---|---|---|---|---|
| Raw Input | 1.7855 | 0.7170 | 0.8158 | 0.3431 | 0.3266 | 0.2299 | 0.2915 | 0.3093 |
| EFR | 1.8191 | 0.7266 | 0.3388 | 0.1403 | 0.1330 | 0.0839 | 0.1807 | 0.2372 |
| PFD-A | *1.7642* | *0.7067* | 0.7924 | 0.3341 | 0.3182 | *0.2386* | *0.3081* | **0.3283** |
| PFD-B | 2.0838 | 0.8504 | *0.2759* | 0.1121 | 0.1049 | 0.0300 | 0.0717 | 0.0880 |
| Voxel Transform | 1.9535 | 0.8167 | 0.3140 | *0.0964* | *0.0836* | 0.1036 | 0.2729 | *0.3255* |
| E-DeflareNet (Ours) | **1.1368** | **0.4651** | **0.1741** | **0.0690** | **0.0644** | **0.3498** | **0.3386** | 0.3011 |
| Improvement (%) | 35.6%↑ | 34.2%↑ | 36.9%↑ | 28.4%↑ | 23.0%↑ | 46.6%↑ | 9.9%↑ | -8.3%↓ |

↓ lower is better, ↑ higher is better. The final row gives the relative improvement of our method over the strongest baseline for each metric; a negative value (TP-F1) means our method trails that baseline.
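The Improvement rows are consistent with a simple relative-change rule measured against the strongest baseline, with Raw Input excluded: for lower-is-better metrics, (baseline - ours) / baseline; for higher-is-better metrics, (ours - baseline) / baseline. The helper below (a hypothetical name) reproduces the published figures under that reading, including the negative TP-F1 entry on E-Flare-R.

```python
def relative_improvement(ours, baseline, lower_is_better):
    """Percentage improvement of `ours` over `baseline` (hypothetical helper).

    Positive means ours is better; negative means ours trails the baseline.
    """
    if lower_is_better:
        # error-style metrics (Chamfer, Gaussian, MSE, PMSE): a reduction is an improvement
        return (baseline - ours) / baseline * 100.0
    # score-style metrics (R-F1, T-F1, TP-F1): an increase is an improvement
    return (ours - baseline) / baseline * 100.0
```

For example, `relative_improvement(1.1368, 1.7642, lower_is_better=True)` (Chamfer on E-Flare-R, ours vs. PFD-A) rounds to 35.6, and `relative_improvement(0.3011, 0.3283, lower_is_better=False)` (TP-F1 on E-Flare-R, ours vs. PFD-A) rounds to -8.3.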

Figure. Qualitative assessments on E-Flare-R. Each column shows the output of a different method applied to the same flare-corrupted input. The rightmost column displays the ground truth for reference. Best viewed in color and zoomed in for details.

@article{han2025e-deflare,
  title   = {Learning to Remove Lens Flare in Event Camera},
  author  = {Haiqian Han and Lingdong Kong and Jianing Li and Ao Liang and Chengtao Zhu and Jiacheng Lyu and Lai Xing Ng and Xiangyang Ji and Wei Tsang Ooi and Benoit R. Cottereau},
  journal = {arXiv preprint arXiv:2512.09016},
  year    = {2025}
}

This work is part of the DesCartes programme and is supported by the National Research Foundation, Prime Minister's Office, Singapore, under its Campus for Research Excellence and Technological Enterprise (CREATE) programme. This work is also supported by the Apple Scholars in AI/ML Ph.D. Fellowship program.